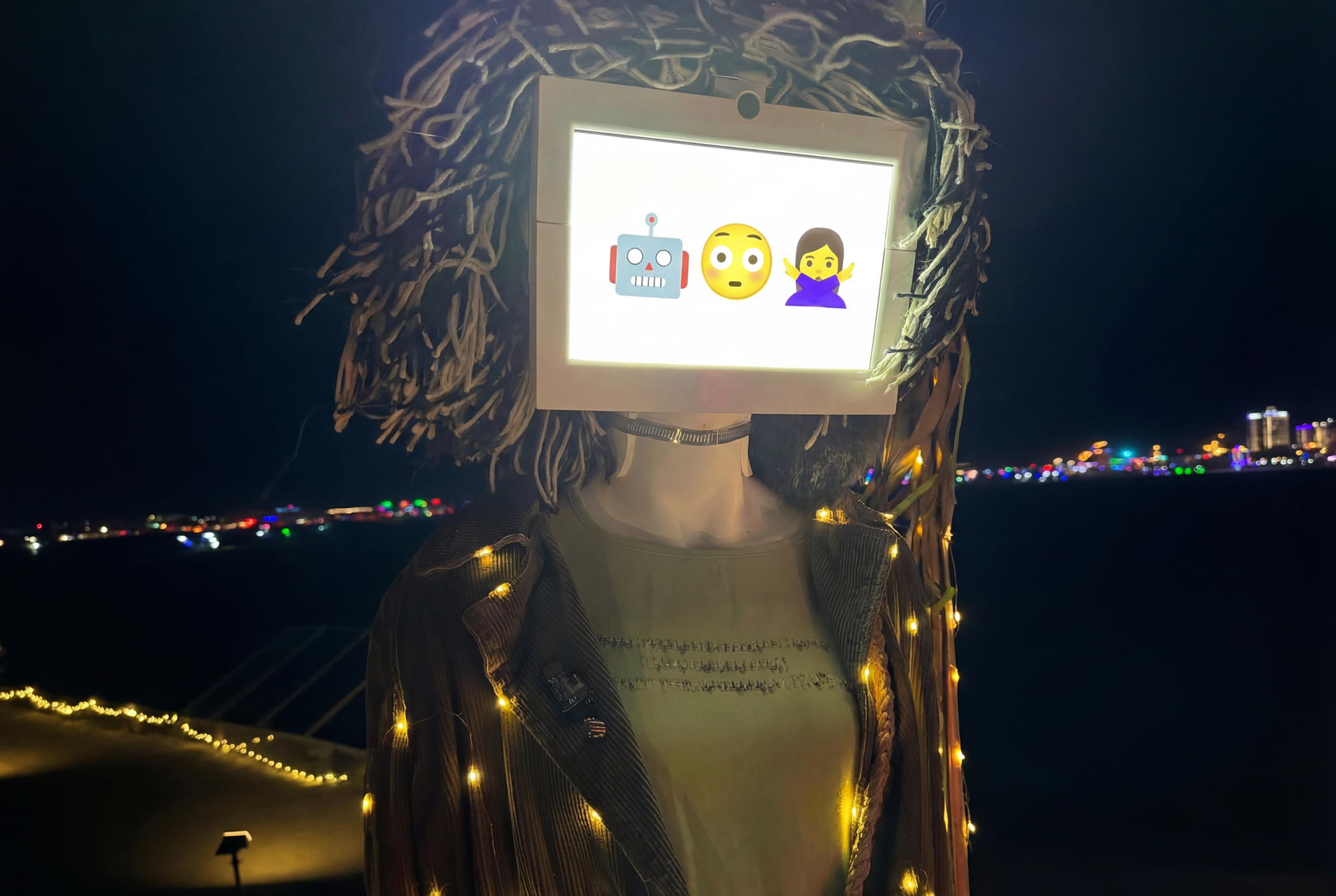

We brought AI to Burning Man before everyone learned to hate it. The GPT-3.5 boom was still fresh in our minds, and some feared that Stable Diffusion 1.5 was the end of art as we know it.

The world’s view of AI has evolved quite a bit since then, and so has our own. We aren’t drowning in a flood of “cats in the style of Van Gogh” — and in fact, perhaps, AI images have taught us to appreciate the value of human art a little bit more. But outside of AI images, we believe that AI is slowly becoming an art medium on its own, a medium that blurs the line between the viewer and the art itself.

In certain ways, this new medium aligns so well with Burning Man culture. The best AI art we saw on the Playa engages you in unique ways, prompting you to self-express on the spot and resulting in a co-creation of experiences that exist in the moment just for you and can never be repeated.

We were happy to see quite a few great AI art pieces on the Playa in 2025. Most worked and were amazing! Still, just as with our very own first attempts, technical problems were not uncommon (to put it lightly) :-)

In this post, we’d like to share our learnings, mistakes, and solutions for bringing interactive, AI-powered art to the Playa and making it survive the week — based on our experience doing it three times in 2023, 2024, and 2025 (with intermittent success).

We’ll share both general guidance and specific examples from our art — MIRA. You can read more about the artistic side of Mira on our project's Instagram.

Can your LLM Survive a Dust Devil?

The best AI art is also great physical art — so all the usual challenges of building art on the Playa apply (we won’t cover them here). In this post, we’re focusing on the technology challenges: how to get LLMs/text2image models/etc. to work in the desert. The first and most important choice you have to make is whether to run locally or rely on the internet and the cloud (we tried both). Then, you need a plan to deal with the heat, the dust, the rain(!), the sun, and, last but not least, the clash of big tech with the Playa culture.

Local LLMs vs. the Cloud

TL;DR: if radical self-reliance and complete freedom of expression are the way of your heart, and you have someone technical (passionate about local LLMs) on your team, and you’re willing to spend a lot of time and money on it — go local. Otherwise, definitely go cloud.

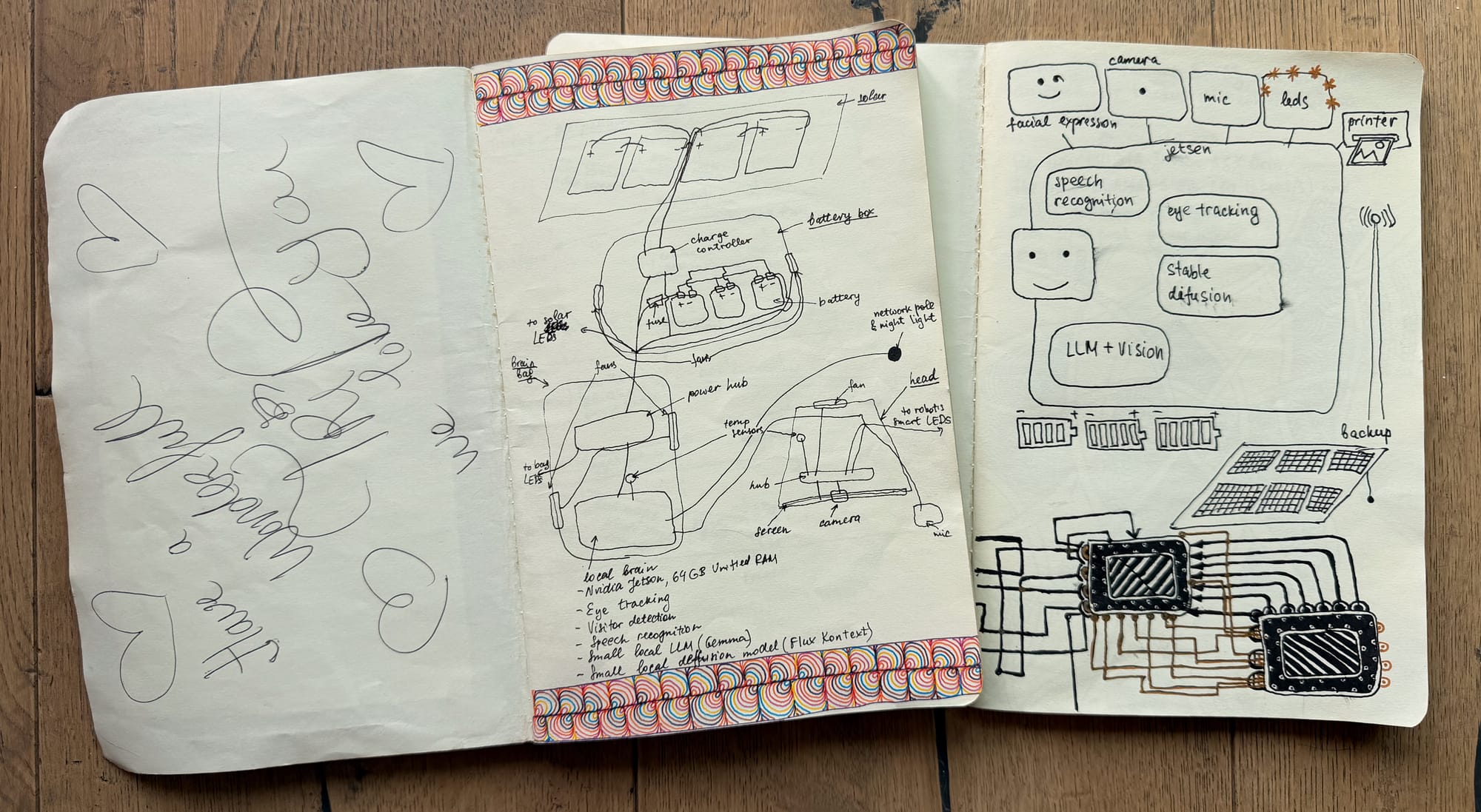

Running AI models locally means fast responses, no connection-related downtime, and — most importantly — it’s the only true form of Radical Self-Reliance your art can have. But… GPUs were never built to run in a desert. Compared to running in the cloud, it gets way more technical, more expensive, limits the size of the models you can run, and increases the chance that it’ll all break mid-burn. If that sounds like a lot of fun to you — we’re in the same boat!

Using the cloud is significantly easier, and you get higher tokens-per-second for any model you want. But you’re relying on the internet connection. Even with Starlink, latency and packet loss can and will make your art a little sluggish every now and then. It somewhat depends on what LLMs do in your art, but in our case (real-time interaction) we consistently measured faster response times and better interactivity when running locally.
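
If you want to make the same comparison for your own piece, it helps to time full round trips rather than trust advertised tokens-per-second. The sketch below is illustrative only, not the code Mira ran: `measure_latency` and `summarize` are hypothetical helpers, and `call` stands in for whatever function sends one request to your local or cloud model.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latency(call: Callable[[], object], runs: int = 20) -> List[float]:
    """Time `call` several times and return each duration in seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        call()  # one full request/response round trip
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations: List[float]) -> Dict[str, float]:
    """Median is the typical wait; p95 and max expose the occasional stalls."""
    ordered = sorted(durations)
    # Nearest-rank 95th percentile, computed with integer math.
    p95_index = (95 * len(ordered) + 99) // 100 - 1
    return {
        "median": statistics.median(ordered),
        "p95": ordered[p95_index],
        "max": ordered[-1],
    }
```

Run it against both setups, ideally over the actual link you plan to use. A flaky connection tends to show up in the p95 and max values long before it moves the median.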

Unfortunately, most major Cloud API providers — and especially Google and OpenAI — don’t believe in the 10 Principles at all. In particular, don’t expect much of Radical Inclusion from them — you will see condescending responses, rejections, and bans on many topics common on the playa and near and dear to Burners’ hearts. If that’s important for you, pick a cloud provider accordingly.

We ended up flip-flopping between the cloud and local: cloud in 2023 (no great local LLMs existed back then), all-local in 2024 (our most successful iteration, also the most satisfying to build!), and back to the cloud in 2025 (we hoped for better models and, naively, for lower latency — but all in vain). Guess what: we’re definitely back to local next time.

The Heat

Most consumer electronics are rated for ambient operating temperatures up to 95°F (35°C) or so. They may sometimes keep working above that, but many devices will actively throttle performance (which is particularly bad for local LLMs) or even shut themselves down.

The safest bet is to look for “industrial” electronics (e.g., “industrial PC”). Either way, you should carefully check the operating temperature (usually listed in device specifications) of each component you’re using (not just computers — things like USB hubs, flash drives, cameras, displays, etc. can all cause problems).

Since most devices produce their own heat, you need a way to dissipate it into the environment. You almost certainly need active fans for that — which, if done right, can also help solve your dust problems (see below).

For our art, we selected components that can survive at least 150°F/65°C. It paid off: even though 2023–2025 were relatively cold years, the temperatures in the enclosures around the electronic components got very close to that:

- Mira’s head (which had a screen, camera, a USB hub, and a small active cooler) reached 140°F during the day — mostly because of the screen getting really hot at high brightness levels.

- Mira’s luggage (which hosted the computer and batteries) reached 113°F. That was quite nerve-wracking for us — the batteries we used in 2024 were the weakest link and would auto shut down at 114°F. We switched to 150°F-rated batteries in 2025.
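
A simple way to find the weakest link is to list each part's rated limit next to the hottest temperature you expect around it, then sort by the remaining margin. Here is a minimal sketch using the numbers above. The `Part` class and `weakest_link` helper are hypothetical names, and the 150°F rating for the head assumes its parts met our component target.

```python
from dataclasses import dataclass
from typing import Iterable


def f_to_c(deg_f: float) -> float:
    """Convert Fahrenheit to Celsius."""
    return (deg_f - 32.0) * 5.0 / 9.0


@dataclass
class Part:
    name: str
    peak_enclosure_f: float  # hottest temperature measured around the part
    rated_max_f: float       # maximum operating temperature from the spec sheet

    @property
    def margin_f(self) -> float:
        return self.rated_max_f - self.peak_enclosure_f


def weakest_link(parts: Iterable[Part]) -> Part:
    """Return the part with the least thermal headroom."""
    return min(parts, key=lambda p: p.margin_f)


parts = [
    Part("head electronics", peak_enclosure_f=140.0, rated_max_f=150.0),
    Part("2024 batteries", peak_enclosure_f=113.0, rated_max_f=114.0),
]
worst = weakest_link(parts)
print(f"{worst.name}: only {worst.margin_f:.0f}°F of headroom")
```

A margin of one degree is exactly the kind of thing you want to discover at home rather than on the Playa.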

Alternatively, you can make your piece run only at nighttime — which can be a great choice especially if it fits the art’s narrative!

The Dust

IP68-rated electronics should be safe, but are often more expensive. In our case, we simply couldn’t find all the components we needed with that rating.

You can protect your electronics by putting them in a box, but keeping it airtight is hard in practice — and Playa dust will find every tiny crack to get in! Even if you could avoid any cracks, you’d still likely want to leave some vents open to dissipate the heat your electronics produce.

The tried-and-true Burning Man solution comes in handy here: positive air pressure. Use one or several fans to give your electronics box more air inflow than outflow, and protect the inflow with a MERV-13 filter. This way, the dusty air only gets inside through the filter and all other openings in your box are only letting air out, so the dust stays outside.

Mira’s head had only one filter-covered inflow 60mm fan. Even though it had plenty of holes without filters, it remained nearly dust-free.

Mira’s luggage (with the computer and batteries) had two large fans — funnily, most visitors assumed those were speakers — one inflow and one outflow (the 2nd fan helped to direct the airflow inside the box). To keep the pressure positive, we set the outflow fan to 25% of its max speed (using a PWM fan controller). The luggage did get a lot of dust inside — but only because we had to open it a few times on the playa to troubleshoot; thankfully, it didn’t cause any damage.
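
To sanity-check a two-fan setup like this before building it, you can estimate whether more air is pushed in than pulled out. The sketch below is a back-of-the-envelope model, not a measurement: it assumes airflow scales roughly linearly with PWM duty, that the filter costs the intake fan a fixed fraction of its airflow, and the fan ratings in the example are made up. `net_inflow_cfm` and `max_outflow_duty` are hypothetical helper names.

```python
def net_inflow_cfm(
    inflow_cfm: float,
    outflow_cfm: float,
    outflow_duty: float,
    filter_loss: float = 0.5,
    inflow_duty: float = 1.0,
) -> float:
    """Filtered air pushed in minus air pulled out (positive means positive pressure).

    Assumes airflow is proportional to PWM duty and that the filter removes
    `filter_loss` (a fraction between 0 and 1) of the intake fan's airflow.
    """
    filtered_in = inflow_cfm * inflow_duty * (1.0 - filter_loss)
    forced_out = outflow_cfm * outflow_duty
    return filtered_in - forced_out


def max_outflow_duty(inflow_cfm: float, outflow_cfm: float, filter_loss: float = 0.5) -> float:
    """Highest outflow duty that still keeps the box at or above neutral pressure."""
    return min(1.0, inflow_cfm * (1.0 - filter_loss) / outflow_cfm)


# Two identical (hypothetical) 100 CFM fans, outflow throttled to 25%.
print(net_inflow_cfm(100.0, 100.0, outflow_duty=0.25))  # positive: dust stays out
print(net_inflow_cfm(100.0, 100.0, outflow_duty=0.75))  # negative: dust gets sucked in
```

Under these assumptions the outflow fan must stay below 50% duty, so running it at 25% leaves a comfortable margin for a filter that clogs over the week.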

When choosing fans, keep in mind that the smaller the fan is, the louder and more annoying its noise will be (which makes little difference on the playa…).

The Rain

In 2023, our experienced burner friends told us they had seen rain once in a dozen burns. So in 2023, we didn’t rainproof Mira at all, and she didn’t survive the rain. We figured that, having seen our rain of the decade, we wouldn’t see another. So in 2025… we didn’t rainproof her either. Miraculously, she did survive. Please don’t repeat our mistakes. It’s not hard to keep your electronics box covered. As a pro tip: a MERV-13 filter is thick enough to keep most of the rain out, so you likely don’t need to worry about water getting in through the air intake.

Keep in mind that the Playa is not drivable for a few hours after each rain (even a minor one), which complicates maintenance. You might want to keep a stash of critical supplies on site, and gear to get there on foot.

The Sun

The Playa is unbelievably bright during the day. If your art needs a screen, get a really bright one. Sun position will matter, so plan for it and think about how to orient your art. Consider adding a shade. If all else fails, consider asking to have your art placed where there is shade (e.g., below the Man or in the Center Camp).

Test any hardware or software that you suspect might depend on ambient brightness in any way.

We discovered a firmware bug in the camera we bought that only triggers on the Playa. At our sunny Californian home, we need to point the camera straight at the sun to replicate the bug. We think it’s an overflow somewhere in its auto-exposure algorithm and it causes the brightness to rapidly pulse.

Internet

You have two options: Starlink or Burning Man Internet. Starlink is the only option if you’re sending images or streaming audio to the cloud (for speech recognition or to use with an Omni-like model). It works, but gets less reliable when more people with Starlinks arrive throughout the week. Also, even the Mini version draws 20–40W (depending on the load), which could easily double your power budget.

Burning Man Internet is a little-known feature of Burning Man: https://internet.burningman.org/. You need an antenna and a direct line of sight to the tower at the Center Camp, but you get a reasonably stable (if not very fast) internet connection that draws just 8.5W of power. We wouldn’t rely on it for actual content, but we used it for monitoring and telemetry with great success. Huge thanks to Burning Man IT!
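
To see what those power draws mean for your batteries, convert watts into watt-hours per day. This is a rough sketch that assumes the link stays powered around the clock; `daily_energy_wh` is a hypothetical helper, and the wattages are the ones quoted above.

```python
def daily_energy_wh(watts: float, hours: float = 24.0) -> float:
    """Energy used by a constant load, in watt-hours."""
    return watts * hours


links = {
    "Starlink Mini (light load)": 20.0,
    "Starlink Mini (heavy load)": 40.0,
    "Burning Man Internet": 8.5,
}
for name, watts in links.items():
    print(f"{name}: {daily_energy_wh(watts):.0f} Wh/day")
```

That works out to 480 to 960 Wh per day for the Starlink Mini versus about 204 Wh per day for Burning Man Internet, before your art draws anything at all.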

(N)SFW

Just like the elements, naked people saying explicit things are an integral part of the burners’ culture and our principles of Radical Self-Expression and Radical Inclusion. Sadly, most LLMs and image generation models are trained to be judgmental prudes. At best, you’ll get rejections or boring responses and, at worst, your visitors may get lectured on morals when saying or showing anything even remotely NSFW. That… might not be well aligned with the artistic vision of your project.

The problem is aggravated when using cloud APIs like OpenAI or Google’s Gemini which usually have additional layers of censorship on top of the LLM itself. You’re at risk of your account being banned and your art shut down mid-burn (although this didn’t happen to us in practice).

We tried alleviating this problem by telling the model about Burning Man and the 10 Principles in its system prompt and begging it to practice Radical Inclusion. That somewhat helped reduce moral lectures, but didn’t prevent dull, boring responses.

The image editing model we used through the API would simply return an error when any nudity was present or even implied anywhere in the frame! Sadly, this excluded people in suggestive costumes or without costumes from fully enjoying our art.
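
Whatever you decide about the model, it is worth making sure an API rejection degrades the experience instead of breaking it. The sketch below shows one possible pattern and is not what Mira ran: `edit_with_fallback` is a hypothetical wrapper, and `primary` and `backup` stand in for whatever functions call your image editing services. If everything fails, the visitor simply sees the unedited frame.

```python
import logging
from typing import Callable, Optional

log = logging.getLogger("art.image")

ImageFn = Callable[[bytes], bytes]  # takes an image, returns the edited image


def edit_with_fallback(
    frame: bytes,
    primary: ImageFn,
    backup: Optional[ImageFn] = None,
) -> bytes:
    """Try the primary editor, then the backup, then give up gracefully."""
    for name, fn in (("primary", primary), ("backup", backup)):
        if fn is None:
            continue
        try:
            return fn(frame)
        except Exception as exc:  # rejections, timeouts, dropped connections
            log.warning("%s image edit failed: %s", name, exc)
    return frame  # last resort: show the original, unedited frame
```

A more permissive model, local or hosted, could serve as the `backup`, so a refusal from the main provider costs a little quality rather than the whole interaction.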

A better way to solve this problem is to use uncensored models. You can use such models either locally or in the cloud (using NSFW-friendly LLM API providers or a rented VM). Community leaderboards and countless threads on r/LocalLlama are very helpful for selecting good uncensored (or less-censored) LLMs. Civit.ai offers a vast selection of NSFW image generation and editing models (you do need to sign up and enable NSFW in settings to see those). However, keep in mind that using such models creates a real risk of having your art go to the other extreme, so please choose with care.

F* You Burn

Our team-of-two’s virgin burn of 2023 was all about Mira. In between building her, refreshing her supplies, troubleshooting and fixing her as she kept breaking, and fixing her code live as we observed some epic fails in her interactions during the event — we didn’t have time for much else. We don’t regret it for a second: we enjoyed every single bit of it.

The lessons learned made subsequent years easier. In 2024 and 2025, Mira turned on on the first try and never broke in a major way. And in 2025, we got several volunteers providing invaluable help assembling and maintaining Mira (HUGE THANKS!). Even then, at least 50% of our time on these burns was devoted to Mira. We keep promising each other to come without art next time. Maybe. Someyear.

A few obvious things we learned the hard way:

- Test, test, test. Every year, we wanted to fully assemble Mira at home at least a month before the burn, to have time to test her end-to-end. We never did. When it comes to art, there is always that one more thing to improve… In retrospect, we should have tested a lot more.

- But then… No amount of testing will prepare your AI for its first burn. No amount of talking to your AI in your pajamas will match the creativity of the actual burners interacting with your art on the playa! Be ready to observe and adapt, and make it very easy for you to change your prompts on the fly during the event. We were spending at least a few hours each day on that.

- In retrospect, our entire burn in 2023 ended up more like a test run, and it’s hard to see how we could have done any better. We spent the exodus thinking about it and writing down every little thing we’d fix next year. If you want to iterate faster, consider regionals!
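
One way to make prompts easy to change on the fly is to keep them in a plain text file and have the running program pick up edits without a restart. Here is a minimal sketch of that idea. `PromptFile` is a hypothetical helper, not Mira's actual code, and it assumes your main loop calls `get()` before each model request.

```python
from pathlib import Path


class PromptFile:
    """Serve the latest contents of a prompt file, re-reading it when it changes."""

    def __init__(self, path: str) -> None:
        self.path = Path(path)
        self._mtime_ns = None  # modification time of the version we last loaded
        self._text = ""

    def get(self) -> str:
        try:
            mtime_ns = self.path.stat().st_mtime_ns
            if mtime_ns != self._mtime_ns:
                text = self.path.read_text(encoding="utf-8").strip()
                if text:  # ignore a half-saved, empty file
                    self._text = text
                    self._mtime_ns = mtime_ns
        except OSError:
            # File missing or unreadable mid-edit: keep the last good prompt.
            pass
        return self._text
```

With this in place, fixing an epic fail is a matter of editing one file over SSH or from a laptop next to the art, and the next visitor gets the new prompt.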